Despite the constant #mainstreaming hype, the branding, and the trillions of dollars being poured into it, there is a simple reality that needs to be stated plainly: There is no intelligence in current “AI”, and there is no working path from today’s Large Language Models (#LLM) and Machine Learning (#ML) systems to anything resembling real, general intelligence.

What we are living through is not an intelligence revolution, it is a bubble – one we’ve seen many times before. The problem with this recurring mess is social, as a functioning democracy depends on the free flow of information. At its core, democracy is an information system: a shared agreement that knowledge flows outward to inform debate, shape collective decisions, and enable dissent. The wisdom of the many is meant to constrain the power of the few.

Over recent decades, we have done the opposite. We built ever more legal and digital locks to consolidate power in the hands of gatekeepers. Academic research, public data, scientific knowledge, and cultural memory have been locked behind paywalls and proprietary #dotcons platforms. The raw materials of our shared understanding, often created with public funding, have been enclosed, monetised, and sold back to the public for profit.

Now comes the next inversion. Under the banner of so-called #AI “training”, that same locked-up knowledge has been handed wholesale to machines owned by a small number of corporations. These firms harvest, recombine, and extract value from it, while returning nothing to the commons. This is not a path to liberal “innovation”. It is the construction of anti-democratic, authoritarian power – and we do need to say this plainly.

A democracy that defers its knowledge to privately controlled algorithms becomes a spectator to its own already shaky governance. Knowledge is a public good, or democracy fails even harder than it is already failing.

Instead of knowledge flowing to the people, it flows upward into opaque black boxes. These closed custodians decide what is visible, what is profitable, and increasingly, what is treated as “truth”. This enclosure stacks neatly on top of twenty years of #dotcons social-control technologies, adding yet more layers of #techshit that we now need to compost.

Like the #dotcons before it, this was never really about copyright or efficiency. It is about whether knowledge is governed by openness or corporate capture, and therefore who knowledge is for. Knowledge is a #KISS prerequisite for any democratic path. A society cannot meaningfully debate science, policy, or justice if information is hidden behind paywalls and filtered through proprietary systems.

If we allow AI corporations to profit from mass appropriation of public knowledge while claiming immunity from accountability, we are choosing a future where access to understanding is governed by corporate power rather than democratic values.

How we treat knowledge – who can access it, who can build on it, and who is punished for sharing it – has become a direct test of our democratic commitments. We should be honest about what our current choices say about us in this ongoing mess.

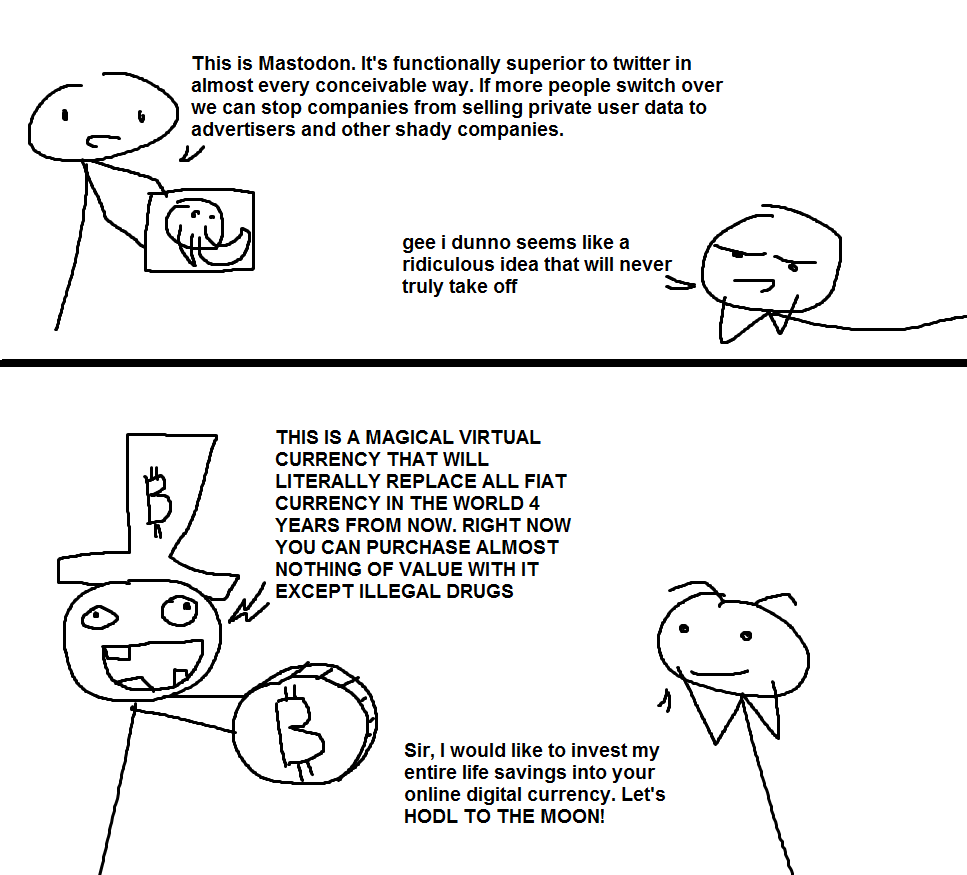

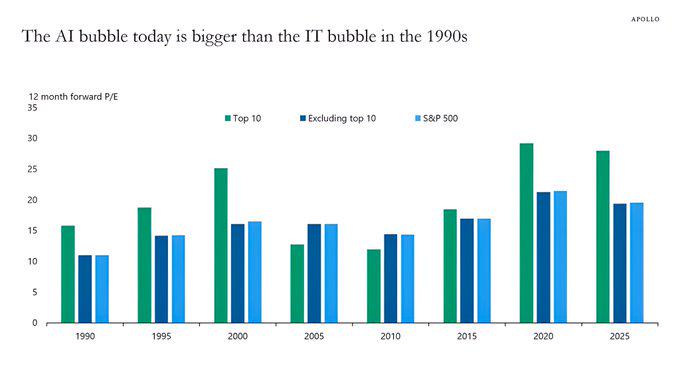

The uncomfortable technical truth is this: general #AI is not going to emerge from current #LLM and ML systems – regardless of scale, compute, or investment. This has serious consequences. There is no coming step-change toward the “innovation” promised to investors, politicians, and corporate strategists, now or in any foreseeable future. The economic bubble beneath the hype matters because AI is currently propping up a fragile, fantasy economic reality. The return on investment that investors are desperate for is simply not there.

So-called “AI agents”, beyond trivial and tightly constrained tasks, will default to being just more #dotcons tools of algorithmic control. Beyond that, thanks to the #geekproblem, they represent an escalating security nightmare, one in which attackers will always have the advantage over defenders. This #mainstreaming arms race will be endless and structurally unwinnable.

Yes, current #LLM systems do have useful applications, but they are narrow, specific, and limited. They do not justify the scale of capital being burned. There are no general-purpose deliverables coming to support the hype. At some point, the bubble will end – by explosion, implosion, or slow deflation.

What we can already predict, especially in the era of #climatechaos, is the opportunity cost. Vast financial, human, and institutional resources are being misallocated. When this collapses, the tech sector will be even more misshapen, and history suggests it will not be kind to workers, let alone the environment. This is the same old #deathcult pattern: speculation, enclosure, damage, and denial.

This moment is not about being “pro” or “anti” technology. It is about recognising that intelligence is social, contextual, embodied, and collective – and that no amount of #geekproblem statistical pattern-matching can replace that. It is about understanding that democracy cannot survive when knowledge is enclosed and mediated by #dotcons corporate capture beyond meaningful public control.

To recap: There is no intelligence in current #AI. There is no path to real AI from here. Pretending otherwise is not innovation – it is denial, producing yet more #techshit that we will eventually have to compost. Any sophists who argue otherwise need to be sacked if they aren’t doing anything practical.

The only question is whether we use this moment to rebuild knowledge as a public good – or allow one more enclosure to harden around us. History – if it continues – will not be neutral about the answer.