We need a better conversation about ideology, sociology, and the #openweb. A good place to start is with a basic liberal framework: “Most social interactions should happen in the open. Some personal interactions should remain private.” Seems reasonable, right? That’s the position many of us think we agree on. Yet when we look at how our technology, and by extension, our society, is being built, that balance is totally out of whack. Today, more and more of life is CLOSED:

Closed apps.

Closed data.

Closed social groups.

Closed algorithms.

Closed hardware.

Closed governance.

And on the flip side, the things that should be protected, our intimate conversations, our location, our health data, are often wide open to surveillance capitalism and state control. What does the current “common sense” dogma get wrong? What is missing from #mainstreaming tech culture, privacy absolutists, and many crypto/anarchist types?

Almost all good social power comes from OPEN.

Most social evils take root in CLOSED spaces.

When people organize together in the open, they create commons, accountability, and momentum. They make movements. When decisions are made behind closed doors, they breed conspiracy, hierarchy, abuse, and alienation.

It’s not just about what is open or closed, it’s about who controls the boundary, and what happens on each side. If we close everything… If we follow the logic of total lockdown, of defaulting to encryption, of mistrust-by-design… then what we’re left with is only the closed. This leads to a brutal truth: the powers that dominate in closed systems are rarely the good ones. Secrecy benefits the powerful far more than the powerless. Always has.

So when we let the #openweb collapse and treat it as naive, we’re not protecting ourselves. We’re giving up the last space where power might be accountable, where ideas might circulate freely, where we might build something together.

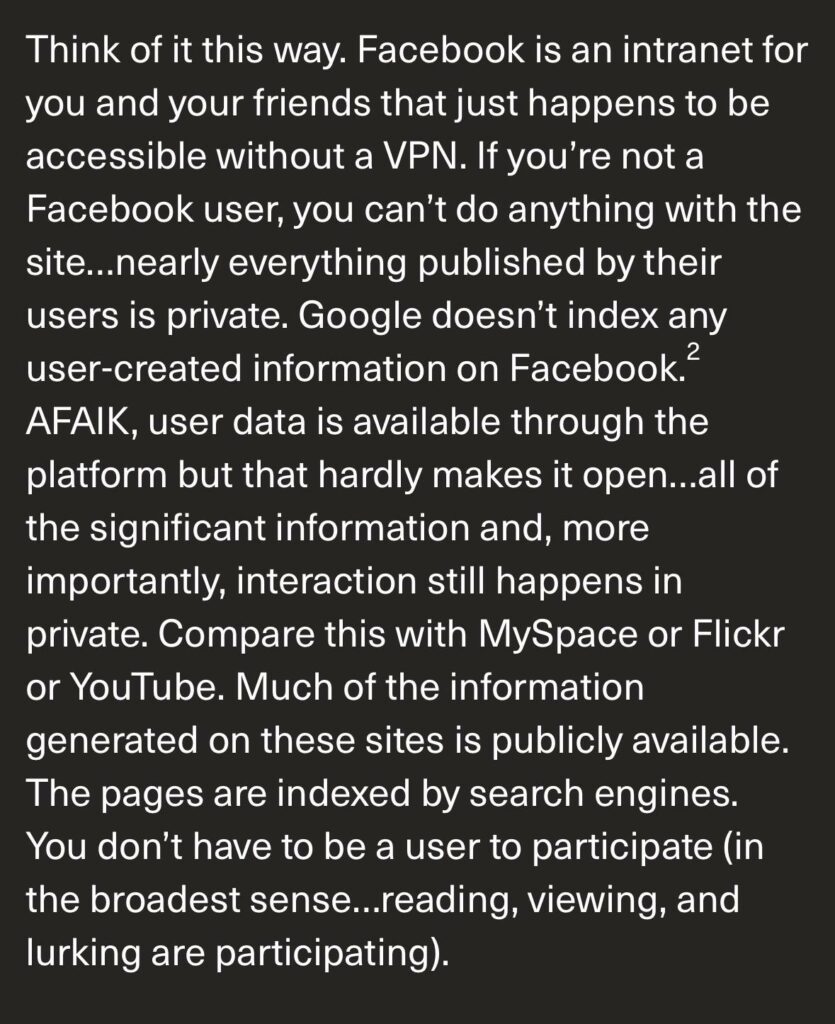

We can find examples of when openness was lost. Let’s talk about a real-world case of #Diaspora vs. #RSS. 15 years ago, Diaspora emerged with crypto-anarchist hype as the alternative to Facebook. It was secure, decentralized, and… mostly closed. It emphasized encryption and privacy, but lacked network effects, openness, and simple flows of information.

In the same era, we already had #RSS, a beautifully open, #KISS decentralized protocol. It powered blogs, podcasts, news aggregators, without permission or centralized control. But the “young #fashionistas” of the scene shouted down RSS as old, irrelevant, and too “open.” They wanted to start fresh, with new protocols, new silos, new power. They abandoned the working #openweb to build “secure” ghost towns.

Fast-forward a decade, and now we’re rebuilding in the Fediverse with RSS+ as #ActivityPub. The same functionality. The same ideals, just more code and more complexity. That 10-year gap is damage caused by the #geekproblem, the failure to build with the past, and for real people.

So what is the #geekproblem? At root, it’s a worldview issue. A failure to think about human beings in real social contexts. Geeks (broadly speaking) assume:

- People are adversaries or threats (thus: encrypt everything),

- Centralization is evil, but decentralization is always pure (thus: build silos of one),

- Social complexity can be reduced to elegant protocols (thus: design first, use later).

But technology isn’t neutral. It reflects ideologies. And if we don’t name those ideologies, they drive the project blindly.

A place to start is to map your ideology. Want to understand how you think about openness vs. closedness? Start by reflecting on where you sit ideologically, not in labels, but in instincts. A quick sketch:

Conservatism: Assumes order, tradition, and authority are necessary. Values stability, hierarchy, and often privacy.

Liberalism: Believes in open society, individual freedom, transparency, and market-based solutions.

Anarchism: Rejects imposed authority, promotes mutual aid, horizontal structures, and often radical openness.

None of these are “right,” but understanding where you lean helps clarify why you use, build, or support certain tools. And if you say you’re building tools for the #openweb, these questions matter:

Do you default to closed and secure, or open and messy?

Who do you trust with knowledge—individuals or communities?

Do you believe good things come from control, or emergence?

These are sociological questions, not just technical ones. Maybe start here: https://en.wikipedia.org/wiki/Sociology and https://en.wikipedia.org/wiki/List_of_political_ideologies.

Where do we go from here? Let’s bring this back to the #openweb and the projects we’re trying to build, like:

#OMN (Open Media Network)

#MakingHistory

#indymediaback

#Fediverse

#P2P tools (DAT, Nostr, SSB, etc.)

All of these projects struggle with the tension between openness and privacy, between usability and purity, between federation and anarchy. But if we start with clear values, and an honest reflection on the world we want to create, we can avoid the worst traps. Let’s say it plainly:

Not everything should be open. But if we close everything, we lose what’s worth protecting.

Let’s talk: What do you think should be closed? What must be kept open at all costs? What’s your ideological instinct, and how does it shape your view of the #openweb?

An interesting look at a #4opens project notice: “‘Strict scrutiny’ means that any measures instituted for security must address a compelling community interest, and must be narrowly tailored to achieve that objective and no other.” We have come a long way from this with our #encryptionsist agendas.